Are you struggling with the cryptic world of character encoding, facing a digital Tower of Babel where languages clash and information vanishes into a jumbled mess of symbols? The seemingly simple act of displaying text on a screen can be a complex dance of standards, and understanding these underlying principles is essential to navigate the digital landscape.

The digital realm, built upon the binary foundation of 0s and 1s, needs a system to represent characters, the building blocks of language. This is where character encoding comes into play. A character set such as Unicode assigns each character (letters, digits, symbols) a unique number, called a code point. A character encoding then defines how those numbers are stored as bytes, which lets computers store, process, and display text. One of the most widely used and versatile encodings is UTF-8, a variable-width encoding that can represent every Unicode character using one to four bytes. Its one-byte range is identical to ASCII.
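To make the variable-width idea concrete, here is a minimal Python sketch that reports each character's code point and the bytes UTF-8 uses for it. The helper name `utf8_info` is our own illustration, not part of any library.

```python
def utf8_info(ch: str) -> tuple[str, str, int]:
    """Return the code point, the UTF-8 bytes in hex, and the byte count for one character."""
    encoded = ch.encode("utf-8")
    return f"U+{ord(ch):04X}", encoded.hex(" "), len(encoded)


# Characters from different scripts need different numbers of bytes.
for ch in ["A", "é", "ž", "輸", "😀"]:
    code_point, hex_bytes, size = utf8_info(ch)
    print(f"{ch}  {code_point}  {hex_bytes}  ({size} byte{'s' if size > 1 else ''})")
```

Plain ASCII letters such as `A` keep their single-byte form, which is why a pure ASCII file is also valid UTF-8.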

Consider the humble ç (latin small letter c with cedilla), the è (latin small letter e with grave), or the é (latin small letter e with acute). Each of these, and countless others, is assigned a specific code point in the Unicode standard (U+00E7, U+00E8, and U+00E9, respectively) and can be written in HTML as a named entity: `&ccedil;`, `&egrave;`, and `&eacute;`. Because this numbering is shared, software on any platform can render the same characters, as long as it decodes the text correctly.
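Python's standard library can look up both the Unicode name and the HTML entity for a character. A small sketch, where `char_info` is a hypothetical helper name:

```python
import html
import unicodedata
from html.entities import codepoint2name


def char_info(ch: str) -> tuple[str, str, str]:
    """Return the code point, the Unicode name, and the named HTML entity of a character."""
    cp = ord(ch)
    return f"U+{cp:04X}", unicodedata.name(ch), f"&{codepoint2name[cp]};"


for ch in "çèé":
    print(*char_info(ch), sep="  ")

# Going the other way: html.unescape turns entities back into characters.
print(html.unescape("&ccedil;&egrave;&eacute;"))  # çèé
print(html.unescape("&#231;"))                    # ç (numeric reference to U+00E7)
```

`codepoint2name` only covers the HTML 4 entity set, so it raises `KeyError` for characters that have no HTML 4 entity name; `html.entities.html5` holds the larger HTML5 table.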

Delving deeper into the intricacies of language, we encounter concepts such as jotovanje (iotation), a sound change prominent in certain Slavic languages, including Serbian. In jotovanje, the consonant j merges with the consonant before it, producing a palatal sound. For example, the verb vezati (to tie) becomes vežem (I tie): the z of the stem and the following j have merged into ž. Shifts like this show how sounds adapt to their neighbors as a language evolves.
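The jotovanje rule can be modelled as a lookup from a stem-final consonant to its palatal result. The sketch below is a simplified illustration, not a complete grammar: the table covers only a common subset of the changes, the helper `iotate` is hypothetical, and it ignores cases such as the labials (b, p, m, v), where an l is inserted instead (grub, grublji).

```python
# A simplified subset of Serbian jotovanje: stem-final consonant + j -> palatal result.
IOTATION = {
    "t": "ć",   # ljut -> ljući
    "d": "đ",   # mlad -> mlađi
    "s": "š",   # pisati -> pišem
    "z": "ž",   # vezati -> vežem
    "k": "č",   # jak -> jači
    "g": "ž",   # drag -> draži
    "h": "š",   # tih -> tiši
    "l": "lj",
    "n": "nj",
}


def iotate(stem: str) -> str:
    """Replace the stem's final consonant with its iotated form (hypothetical helper)."""
    last = stem[-1]
    if last not in IOTATION:
        raise ValueError(f"no iotation rule for {last!r} in this simplified table")
    return stem[:-1] + IOTATION[last]


print(iotate("vez") + "em")   # vežem  (I tie)
print(iotate("mlad") + "i")   # mlađi  (younger)
```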

Garbled text is a common and frustrating problem. When text appears as a series of unintelligible symbols, it usually indicates a mismatch between the character encoding used to store the text and the encoding used to display it. The culprit can be anything from a file saved in an unexpected encoding to a misconfigured web server. Repairing the text requires knowing which encoding was used in the first place and reversing the faulty decoding. Online tools can help by re-decoding the garbled characters and displaying the content in its correct form.
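The mismatch is easy to reproduce. In this Python sketch, a word is saved as UTF-8 but read back as Latin-1 (ISO-8859-1), one common way garbled text arises. Because Latin-1 maps every byte to a character, no bytes are lost, so encoding with Latin-1 and decoding as UTF-8 reverses the mistake. Real files may involve other encoding pairs, so treat the pair used here as an assumption.

```python
original = "vežem"

# Save the text as UTF-8: "ž" becomes the two bytes C5 BE.
stored = original.encode("utf-8")

# Read it back with the wrong encoding: each byte becomes its own Latin-1 character.
garbled = stored.decode("latin-1")
print(garbled)   # veÅ¾em

# Reverse the mistake: recover the original bytes, then decode them correctly.
repaired = garbled.encode("latin-1").decode("utf-8")
print(repaired)  # vežem
```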

Let's turn to Serbian, where phonetic nuances matter just as much. Like other Slavic languages, Serbian has distinct sounds that can be tricky for learners. The letters Č, Ć, Dž, Đ, Ž, and Š often present a hurdle: Š, Ž, Č, and Dž roughly match the English sounds in "ship," "measure," "church," and "jam," but Ć and Đ have no close English equivalent. Learners also often confuse C, Ć, and Č, which matters because these sounds distinguish words from one another. Dedicated and interactive lessons can help learners master them.

Furthermore, jednačenje suglasnika po zvučnosti (assimilation of consonants by voicing) is key to understanding Serbian phonology. The voiceless consonants are c, č, ć, f, h, k, p, s, š, and t; all except c, f, and h have a voiced partner (b-p, d-t, dž-č, đ-ć, g-k, z-s, ž-š). When two such consonants meet, the first takes on the voicing of the second: a voiced consonant becomes voiceless before a voiceless one (vrabac, genitive vrapca), and a voiceless consonant becomes voiced before a voiced one (svat + ba gives svadba). This highlights how closely the sounds within a word influence each other.
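The voicing rule can be sketched as a right-to-left pass over a word, where each consonant copies the voicing of the one after it. This Python sketch is a simplification under stated assumptions: it works on the plain concatenation of morphemes (for example od + pisati written as "odpisati"), treats dž as one letter, expects NFC-normalized input, and ignores the exceptions that Serbian spelling allows. The function names are hypothetical.

```python
# Voiced -> voiceless pairs listed in the text.
VOICED_TO_VOICELESS = {"b": "p", "d": "t", "dž": "č", "đ": "ć", "g": "k", "z": "s", "ž": "š"}
VOICELESS_TO_VOICED = {v: k for k, v in VOICED_TO_VOICELESS.items()}
# c, f and h are voiceless but have no voiced partner; they still cause devoicing.
VOICELESS = set(VOICELESS_TO_VOICED) | {"c", "f", "h"}


def letters(word: str) -> list[str]:
    """Split a Serbian Latin word into letters, keeping the digraph dž together."""
    out, i = [], 0
    while i < len(word):
        step = 2 if word[i:i + 2] == "dž" else 1
        out.append(word[i:i + step])
        i += step
    return out


def assimilate(word: str) -> str:
    """Apply regressive voicing assimilation to a morpheme concatenation."""
    ls = letters(word)
    # Right to left, so each letter sees its already-updated right-hand neighbour.
    for i in range(len(ls) - 2, -1, -1):
        cur, nxt = ls[i], ls[i + 1]
        if nxt in VOICELESS and cur in VOICED_TO_VOICELESS:
            ls[i] = VOICED_TO_VOICELESS[cur]   # devoicing, e.g. b -> p before c
        elif nxt in VOICED_TO_VOICELESS and cur in VOICELESS_TO_VOICED:
            ls[i] = VOICELESS_TO_VOICED[cur]   # voicing, e.g. t -> d before b
    return "".join(ls)


print(assimilate("vrabca"))    # vrapca
print(assimilate("svatba"))    # svadba
print(assimilate("odpisati"))  # otpisati
```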

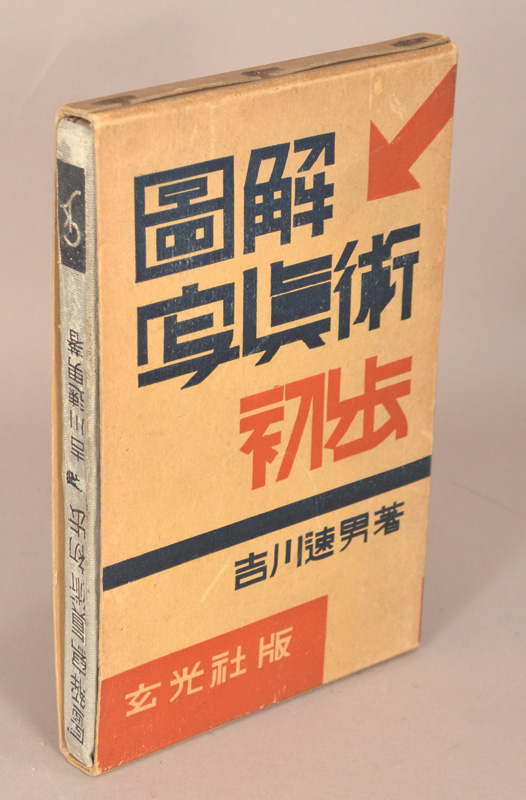

One particularly challenging scenario involves broken Chinese characters. When text appears as a sequence like 具有éœé›»ç¢çŸè£ç½®ä¹‹å½±åƒè¼¸å…¥è£ç½®, it points to a character encoding problem. The original text was most likely stored as UTF-8, which uses three bytes for each of these Chinese characters, but the bytes were decoded with a single-byte Western encoding such as Windows-1252, so each Chinese character turned into a run of accented letters and symbols. The remedy is to identify the correct encoding and reinterpret the bytes accordingly. On a web page, a `meta` tag such as `<meta charset="utf-8">` in the `<head>` tells the browser which encoding to use, helping it interpret the text correctly.
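A common repair heuristic is to re-encode the garbled text with the encoding it was wrongly decoded with, then decode the result as UTF-8, keeping the result only if both steps succeed. The sketch below assumes the wrong encoding was Windows-1252 (`cp1252` in Python); `try_repair` is a hypothetical helper, and a damaged string like the one above may not be fully recoverable this way if some of its bytes were lost.

```python
def try_repair(text: str, wrong_encoding: str = "cp1252") -> str:
    """Undo a UTF-8 -> wrong_encoding mix-up; return the text unchanged if that fails."""
    try:
        return text.encode(wrong_encoding).decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        # Either the text cannot come from wrong_encoding, or its bytes are not
        # valid UTF-8, so it was probably not mis-decoded in this way.
        return text


garbled = "輸入".encode("utf-8").decode("cp1252")
print(garbled)              # è¼¸å…¥
print(try_repair(garbled))  # 輸入
print(try_repair("輸入"))   # 輸入 (already correct, left alone)
print(try_repair("café"))   # café (valid cp1252 bytes, but not valid UTF-8)
```

Returning the input unchanged on failure keeps the heuristic safe to run over text that was never garbled.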

Character encoding problems are not limited to a single language or system; they can arise wherever text passes between programs, platforms, and languages. That makes it crucial to understand the basic principles and strategies for dealing with them. By understanding character encoding, the structure of the languages involved, and the tools available to us, we can navigate the complex world of digital text and ensure that information is preserved and accurately presented.

Here's a summary table of the common mistakes that lead to these issues.

| Problem | Description | Cause | Solution |
|---|---|---|---|
| Garbled text | Unintelligible characters | Mismatch between the encoding used to save the text and the one used to display it | Identify the correct encoding and use it for display |
| Incorrect pronunciation | Misunderstanding of sounds | Lack of familiarity with the language's phonetics | Dedicated study of the language's phonetic system |
| Broken Chinese characters | Characters do not display correctly | Incorrect character encoding when displaying the text | Display the text using the correct character encoding |

In conclusion, understanding character encoding and the nuances of language is essential for accurate and effective communication in the digital age.