Have you ever encountered a wall of indecipherable characters, a digital puzzle where words become meaningless symbols? The phenomenon of garbled text, a frustration familiar to anyone who's navigated the complexities of the internet, stems from the clash between how information is encoded and how it's interpreted.

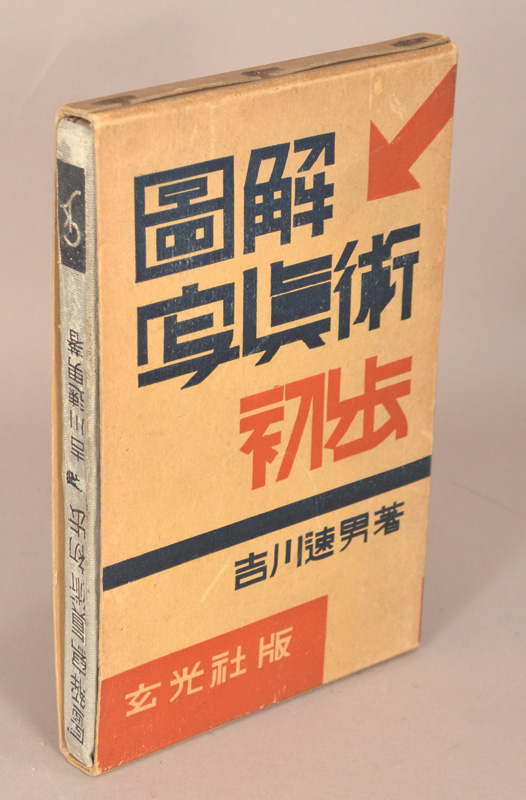

The digital world relies on a shared convention: character encoding. An encoding defines how letters, numbers, and symbols are mapped to the bytes that computers store and transmit. When this mapping goes awry, that is, when the encoding used to write the text doesn't match the one used to read it, the result is often a jumble of seemingly random glyphs. This is commonly called mojibake (from Japanese, "character transformation") or, for Chinese text, luanma (乱码, literally "chaotic code").
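To make the idea of a mapping concrete, here is a small Python sketch that shows the bytes several encodings assign to the same characters. It uses only standard-library codec names. The sample characters and the helper name `encoded_bytes` are arbitrary choices made for illustration.

```python
# Show how different encodings map the same characters to different bytes.
SAMPLES = ["A", "中"]
ENCODINGS = ["utf-8", "gb2312", "big5"]


def encoded_bytes(text: str, encoding: str) -> bytes:
    """Return the byte sequence that `encoding` assigns to `text`."""
    return text.encode(encoding)


if __name__ == "__main__":
    for ch in SAMPLES:
        for enc in ENCODINGS:
            # bytes.hex(" ") prints the bytes as space-separated hex pairs
            print(f"{ch!r} in {enc:>6}: {encoded_bytes(ch, enc).hex(' ')}")
```

The letter A is the single byte 0x41 in all three encodings. By contrast, 中 becomes three bytes in UTF-8 (e4 b8 ad), and two different two-byte sequences in GB2312 (d6 d0) and Big5 (a4 a4). Reading one of those byte sequences with the wrong table is what produces garbled text.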

Let's look more closely at this issue: its causes, how it manifests, and how to fix it. The problem usually arises when data is written or transmitted in one encoding but displayed in another. This mismatch can occur in many contexts, including email, database entries, website content, and software interfaces. A common culprit is a text file whose encoding is never declared, or is declared incorrectly. That forces the software to guess how to interpret the bytes, and it often guesses wrong. In other cases the fault lies deeper in the stack, for example in how a system stores or converts character data internally.
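The basic failure is easy to reproduce. This minimal Python sketch writes text as UTF-8 and then reads it back as Windows-1252 (Python's `cp1252` codec). It simulates a program that guessed the wrong encoding. The helper name `misread` is purely illustrative.

```python
def misread(text: str, written_as: str = "utf-8", read_as: str = "cp1252") -> str:
    """Encode `text` with one codec and decode the bytes with another.

    errors="replace" stands in for a lenient viewer: bytes the reading
    codec cannot map become U+FFFD instead of raising an exception.
    """
    raw = text.encode(written_as)
    return raw.decode(read_as, errors="replace")


if __name__ == "__main__":
    print(misread("café"))         # cafÃ©: the two UTF-8 bytes of é shown as two characters
    print(misread("plain ASCII"))  # ASCII-only text survives unchanged
```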

| Aspect | Details |
|---|---|
| Common term | Mojibake (from Japanese), garbage characters, or, more generally, a character encoding error |
| Definition | Incorrect rendering of text caused by a mismatch between the encoding used to create the text and the encoding used to display it |
| Manifestations | Unreadable symbols, question marks, boxes, or other unexpected characters; the exact pattern depends on the original encoding and the encoding wrongly used to decode it |
| Causes | Text stored or transmitted in one encoding but displayed in another; undeclared or misidentified encodings that force software to guess |
| Common encodings involved | UTF-8 (the most common on the web), ISO-8859-1 (Latin-1), Windows-1252, GB2312, Big5 |
| Consequences | Loss of meaning, difficulty reading and understanding text, potential data corruption |
| Solutions | Identify the correct encoding, convert the text to the target encoding (usually UTF-8), and use the same encoding consistently from data entry to display |
| Resources | Wikipedia - Mojibake |

Consider the string çµåŠ›ç»žç›˜. It is a Chinese phrase that conveys a specific meaning when displayed correctly, but it appears as a meaningless run of symbols when the encoding is mismatched. Chinese text is particularly susceptible to encoding errors because of the vast number of characters and the multiple encoding systems designed to support them. In some cases, the source text was encoded in GB2312 or Big5, which are common in mainland China and Taiwan respectively, and the browser or software tries to render it as UTF-8, the dominant encoding on the internet today. In other cases, the document was created in UTF-8 but later converted to, or read as, a different encoding. This is another common source of such characters.
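The first scenario is GB2312 bytes read by software that assumes UTF-8 or Big5. The sketch below reproduces it, assuming the reader substitutes U+FFFD for invalid bytes rather than failing outright, as many viewers do. The helper name `read_with` is hypothetical.

```python
REPLACEMENT = "\ufffd"  # U+FFFD, often rendered as a question mark in a diamond


def read_with(raw: bytes, encoding: str) -> str:
    """Decode `raw` leniently, the way a forgiving viewer would."""
    return raw.decode(encoding, errors="replace")


if __name__ == "__main__":
    gb_bytes = "中文".encode("gb2312")    # b'\xd6\xd0\xce\xc4'
    print(read_with(gb_bytes, "gb2312"))  # correct encoding: 中文
    print(read_with(gb_bytes, "utf-8"))   # not valid UTF-8: replacement characters
    print(read_with(gb_bytes, "big5"))    # wrong table: not 中文
```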

Much of the confusion comes from the historical coexistence of several encoding standards. Windows-1252, for example, is an 8-bit encoding that has long been the default code page on Western-language versions of Windows. ISO-8859-1, also known as Latin-1, is another 8-bit encoding that covers many Western European languages. UTF-8, on the other hand, is a variable-width encoding that can represent every character in Unicode, which makes it the preferred choice for the modern web. All three agree on the 128 ASCII characters, so plain English text usually survives a mismatch. The differences, and therefore most mojibake, appear in bytes 0x80 and above.
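You can check how these encodings relate directly with Python's standard codecs. The sketch below lists the byte values on which Windows-1252 and ISO-8859-1 disagree. Both helper names are hypothetical. Decoding is strict, so bytes that Windows-1252 leaves undefined are reported as None.

```python
from typing import Optional


def decode_byte(value: int, encoding: str) -> Optional[str]:
    """Decode one byte strictly; return None if the codec leaves it undefined."""
    try:
        return bytes([value]).decode(encoding)
    except UnicodeDecodeError:
        return None


def cp1252_latin1_differences() -> dict[int, tuple[Optional[str], Optional[str]]]:
    """Map each byte where Windows-1252 and ISO-8859-1 disagree to both readings."""
    diffs = {}
    for value in range(256):
        a = decode_byte(value, "cp1252")
        b = decode_byte(value, "latin-1")
        if a != b:
            diffs[value] = (a, b)
    return diffs


if __name__ == "__main__":
    diffs = cp1252_latin1_differences()
    print(f"{len(diffs)} differing bytes, from 0x{min(diffs):02X} to 0x{max(diffs):02X}")
    print(diffs[0x80])  # a euro sign in Windows-1252 vs. a C1 control character in Latin-1
```

Everything below 0x80 is shared with ASCII, and therefore with UTF-8. The two 8-bit encodings also agree from 0xA0 to 0xFF. Only the 32 bytes from 0x80 to 0x9F differ, and that range is where Windows-1252 places characters such as € and curly quotes.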

The article Unicode 中文乱码速查表 ("Unicode Chinese mojibake quick-reference table") illustrates the problem clearly, listing the kinds of errors that occur and the reasons behind them. Incorrect guesses by software are the main reason users run into these problems. When dealing with problematic text, the first step is always to determine the correct encoding. Online tools can help with this by analyzing the byte sequence and comparing it with known encoding patterns. When the correct encoding is unknown, the process may come down to trial and error.
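You can partly automate trial and error. The sketch below is a hypothetical helper, not any particular tool's algorithm. It attempts a strict decode with each candidate encoding and keeps the ones that succeed. A successful decode only proves that the bytes are valid in that encoding, not that the encoding is correct. A human, or a language-aware detector, still has to choose among the results.

```python
def candidate_decodings(raw: bytes, encodings: list[str]) -> dict[str, str]:
    """Return {encoding: text} for every encoding that decodes `raw` without errors.

    Single-byte codecs such as latin-1 accept any byte sequence, so they
    always "succeed"; treat them as a last resort.
    """
    results = {}
    for enc in encodings:
        try:
            results[enc] = raw.decode(enc)  # strict mode raises on invalid bytes
        except (UnicodeDecodeError, LookupError):
            continue  # invalid for this codec, or the codec name is unknown
    return results


if __name__ == "__main__":
    mystery = "中文".encode("utf-8")
    for enc, text in candidate_decodings(mystery, ["utf-8", "gb2312", "big5", "cp1252"]).items():
        print(f"{enc:>7}: {text}")
```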

Once the correct encoding is identified, the next step is to convert the text to the desired encoding with a text editor, a programming language, or an online converter. For instance, text stored as Windows-1252 can be converted to UTF-8 so that it displays correctly in most modern browsers and systems. It is equally important that every component of the pipeline, from data entry to display, uses the same encoding. In a database, the encoding settings of the database itself, its tables, and its individual columns must all be consistent. If you're retrieving data from an API, verify that it declares the encoding it actually sends. Over HTTP, this is normally the charset parameter of the Content-Type header.
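In Python, such a conversion is a decode followed by an encode. Here is a minimal sketch that assumes the source encoding is already known. The function names are hypothetical. Working on raw bytes keeps the conversion explicit instead of relying on Python's platform-dependent default text encoding.

```python
from pathlib import Path


def reencode(raw: bytes, source: str, target: str = "utf-8") -> bytes:
    """Convert bytes from the `source` encoding to the `target` encoding.

    Decoding is strict, so bytes that are invalid in `source` raise
    UnicodeDecodeError instead of being silently replaced.
    """
    return raw.decode(source).encode(target)


def convert_file(src: Path, dst: Path, source: str, target: str = "utf-8") -> None:
    """Read `src` as raw bytes, re-encode them, and write the result to `dst`."""
    dst.write_bytes(reencode(src.read_bytes(), source, target))


if __name__ == "__main__":
    legacy = b"caf\xe9 \x93quoted\x94"  # Windows-1252 bytes
    print(reencode(legacy, "cp1252").decode("utf-8"))
```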

Another example is é ç ¹å»ºè®¾å å ¬å®¤, which shows how a Chinese phrase appears when viewed with the wrong encoding: as a collection of nonsensical characters. The goal is to recover the original meaning. This can often be done by selecting the appropriate encoding in a text editor, web browser, or online conversion tool. In extreme cases, where the data has been corrupted and the original encoding is unknown, recovering the original content becomes difficult and sometimes impossible. This is why maintaining data integrity and following correct encoding practices is paramount.
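The most common mismatch is UTF-8 read as Windows-1252. In that case, you can sometimes reverse the damage by re-encoding the garbled text with the wrong codec and decoding the resulting bytes with the right one. The sketch below assumes exactly that pair of encodings. The helper name `repair_mojibake` is hypothetical. It returns None when the round trip fails, which happens when bytes were lost or altered along the way.

```python
from typing import Optional


def repair_mojibake(garbled: str, wrong: str = "cp1252", right: str = "utf-8") -> Optional[str]:
    """Undo a wrong-codec decode by re-encoding and decoding correctly.

    Returns None if the text cannot be mapped back to bytes with `wrong`,
    or if those bytes are not valid in `right` (e.g. a byte was dropped).
    """
    try:
        return garbled.encode(wrong).decode(right)
    except UnicodeError:  # covers both UnicodeEncodeError and UnicodeDecodeError
        return None


if __name__ == "__main__":
    garbled = "绞盘".encode("utf-8").decode("cp1252")  # simulate the mismatch
    print(garbled)                   # ç»žç›˜
    print(repair_mojibake(garbled))  # 绞盘
    print(repair_mojibake("çµåŠ›"))   # None: the bytes no longer form valid UTF-8
```

Applied to the article's first example, çµåŠ›ç»žç›˜, the whole string does not round-trip. Its tail, ç»žç›˜, does round-trip, which suggests that a byte near the start went missing before the text reached this page.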

Proper character encoding matters whenever you work with international text. The Unicode Consortium develops and maintains the Unicode standard, which aims to assign a unique code point to every character used in the world's writing systems. UTF-8 is the most commonly used Unicode encoding on the World Wide Web because it can represent every Unicode character while remaining byte-for-byte compatible with ASCII. Knowing how these encodings work helps you diagnose and fix problems. For example, the question "How to encode and decode Broken Chinese/Unicode characters?" points out that the problem frequently comes from an encoding mismatch. A typical case is text that should have been decoded as UTF-8 but was decoded as Windows-1252 instead.
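UTF-8's variable width is what lets it cover all of Unicode while staying compatible with ASCII. This quick Python check shows how many bytes a few sample characters need. The helper name `utf8_width` is illustrative.

```python
def utf8_width(ch: str) -> int:
    """Number of bytes UTF-8 uses for the single character `ch`."""
    return len(ch.encode("utf-8"))


if __name__ == "__main__":
    for ch in ["A", "é", "中", "😀"]:
        print(f"U+{ord(ch):04X} {ch!r}: {utf8_width(ch)} byte(s)")
```

ASCII characters take one byte, accented Latin letters two, common Chinese characters three, and emoji four. This also explains why Chinese text misread as Windows-1252 tends to show runs starting with å, æ, ç, or é. Characters in the main CJK block (U+4E00 to U+9FFF) are three-byte sequences whose first byte falls between 0xE4 and 0xE9.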

The article ISO-8859-1 (ISO Latin 1) Character Encoding covers the practical side of character encoding. This single-byte standard covers the characters commonly used in Western European languages. If a document that is supposed to be ISO-8859-1 is displayed as gibberish, the viewing system is using a different encoding. The damage is typically limited to characters outside the ASCII range, such as é or ü, because ASCII characters are encoded identically in ISO-8859-1, Windows-1252, and UTF-8.
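When Latin-1 bytes are read as UTF-8, the failure looks different. In this sketch, the sample words and the helper name are arbitrary. The lenient error handling is assumed to mimic a viewer that shows U+FFFD, often drawn as a question mark in a diamond, for bytes it cannot decode.

```python
def latin1_read_as_utf8(text: str) -> str:
    """Encode `text` as ISO-8859-1, then decode the bytes leniently as UTF-8."""
    return text.encode("latin-1").decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(latin1_read_as_utf8("cafe"))  # ASCII only: unchanged
    print(latin1_read_as_utf8("café"))  # 0xE9 starts an incomplete UTF-8 sequence -> U+FFFD
    print(latin1_read_as_utf8("über"))  # 0xFC is never valid in UTF-8 -> U+FFFD
```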

The Unicode Table - Complete list of Unicode characters is a valuable reference that lists characters and symbols along with their code points. It helps both with understanding mojibake and with debugging it, because it lets an end user check how a character should actually appear.
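Python's standard `unicodedata` module provides the same kind of information as a Unicode table, but programmatically. The hypothetical helper below lists the code point and official name of each character. With it you can see, for example, that a garbled Ã© is really two separate Latin characters rather than one accented letter.

```python
import unicodedata


def describe(text: str) -> list[tuple[str, str]]:
    """Return (code point, Unicode name) for each character in `text`."""
    return [
        # control characters have no name, so fall back to a placeholder
        (f"U+{ord(ch):04X}", unicodedata.name(ch, "<no name>"))
        for ch in text
    ]


if __name__ == "__main__":
    for code, name in describe("Ã©中"):
        print(code, name)
```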

Tools for resolving these issues are also available. Online utilities offer a quick way to diagnose garbled characters and to recover corrupted text. Keep in mind that their success depends on identifying the correct encoding, or the closest approximation to it.

In short, understanding character encoding is crucial in the digital age. From writing an email to building a website, character encoding lies at the heart of how we communicate. When encoding goes wrong, the result is not just inconvenient; it's a barrier to communication. Taking the time to learn about character encodings, implement them correctly, and troubleshoot encoding issues saves time, reduces frustration, and ensures that information is communicated accurately. The ability to quickly identify, understand, and resolve mojibake is an increasingly valuable skill. It helps keep the digital world a place where words, symbols, and data can be understood by all.